The Future of Customer Support

Are you ready to transform your Cloud Contact Center services? In this blog post, we explore Agent Coach, an application that supports customer-agent interactions with real-time, voice-enabled assistance. Using the Web Speech API and Socket.IO, it gives customers an intuitive interface for interacting through voice input during a call. The application also integrates the MS Azure OpenAI API to generate instructional responses for agents based on customer queries and sentiment analysis.

Purpose: Transforming Customer-Agent Interactions

The Agent Coach application has a clear mission: to enhance customer-agent interactions and streamline call support efficiency. By enabling voice input, transcribing it into text, and providing AI-generated instructional responses, it aims to optimize the communication process for a seamless and satisfying customer experience. Here’s a breakdown of the key purposes it serves within the realm of customer-agent interactions:

Customer Call Interaction:

- Enable customers to engage with the application during a call through voice input.

- Transcribe customer voice into text, providing a tangible record of the conversation.

- Offer instructional responses to guide customers through their queries and concerns.

AI-based Agent Instruction:

- Perform sentiment analysis on the customer’s call response to understand their emotional tone.

- Generate real-time instructional responses for agents, enhancing their ability to respond effectively.

- Infuse AI-driven insights into agent-customer interactions, optimizing the overall call experience.

Agent Call Interaction:

- Empower agents to view and respond to customer call messages using voice input.

- Transcribe agent voice into text, ensuring a clear understanding of agent-customer dialogues.

- Equip agents with instructional responses, providing real-time guidance for more impactful interactions.

Real-time Call Communication:

- Utilize Socket.IO for real-time communication between customers and agents.

- Foster a dynamic and responsive call experience, eliminating delays in message transmission.

- Ensure a fluid interaction flow, enhancing customer satisfaction and agent efficiency.

Summarization:

- Condense call conversations into concise summaries for a quick overview.

- Provide a snapshot of key points, allowing for efficient review and analysis.

- Enhance post-call processes by offering a comprehensive yet succinct record of interactions.

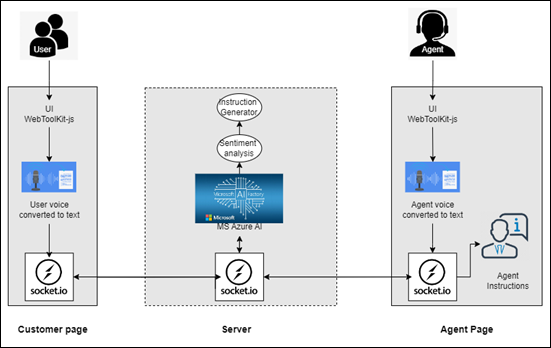

Architecture: The Engine Behind Seamless Communication

The Agent Coach application uses a client-server architecture to provide real-time call support with AI-generated instructional responses. The client-side components, the customer and agent pages, use the Web Speech API to capture voice input, transcribe it into text, and display it for review. The server side handles real-time communication between customers and agents using Socket.IO and integrates the MS Azure OpenAI API to perform sentiment analysis and generate instructional responses based on the customer’s message and its sentiment. This design supports smooth interactions, personalized help, and efficient call support, all of which improve overall customer satisfaction.

Integration

Integrating the components of the Agent Coach application is like weaving a seamless fabric that ties together the client-side interfaces (customer.html and agent.html) and the server-side magic happening in app.js. Let’s break it down into simpler terms:

1. Client-Side Integration:

Customer.html

- Picture the customer clicking the “Start Recording” button. The Web Speech API kicks into action, capturing what the customer is saying.

- The captured voice transforms into text, landing in a cozy spot called transcriptionDiv for the customer to easily review.

- Hold on, we’re not done! This transcribed gem of a call response now embarks on a journey to app.js on the server through a WebSocket connection using Socket.IO.

Agent.html

- Now switch hats to the agent’s world. Here, the agent is glancing at customer call messages and responding using their dulcet tones.

- Like the customer’s journey, the Web Speech API is at play again, capturing the agent’s voice input and turning it into sweet, readable text in transcriptionDiv.

- But wait, there’s more! The agent’s wisdom, now transcribed, joins the pilgrimage to app.js via Socket.IO.

2. Server-Side Integration:

App.js:

- Our server-side maestro, app.js, graciously receives the transcribed messages from both customers and agents through the mystical powers of Socket.IO. It’s like a digital postman delivering messages at the server’s doorstep.

const socketIO = require('socket.io');

- With the messages in hand, the server puts on its thinking cap and initiates sentiment analysis on the customer’s call response. Is it happy, sad, or just chill? That’s what we’re figuring out.

- Enter MS Azure OpenAI API, the brainiac of the operation. It takes the sentiment and customer queries, cooks up some magic, and voila! Instructional responses are born. These responses are the secret sauce guiding our agents on how to navigate the call with finesse.

const { Configuration, OpenAIApi } = require("openai");

const configuration = new Configuration({ apiKey: 'your-api-key' });

const openai = new OpenAIApi(configuration);
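A hardcoded placeholder key is fine for a demo, but a deployed server would usually read the key from its environment. Here is a minimal sketch of that idea. The helper name readApiKey and the environment variable AGENT_COACH_API_KEY are assumptions made for illustration and do not come from the original application.

```javascript
// Hypothetical helper: reads the API key from an environment-like object.
// The variable name AGENT_COACH_API_KEY is an assumption for illustration.
function readApiKey(env) {
  const key = (env.AGENT_COACH_API_KEY || "").trim();
  if (!key) {
    // Fail early so a missing key is noticed at startup, not on the first call.
    throw new Error("AGENT_COACH_API_KEY is not set");
  }
  return key;
}
```

In app.js this could be called as readApiKey(process.env), with the result passed as the apiKey value.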

Working Process: From voice input to real-time communication

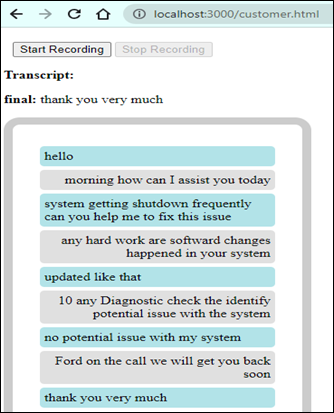

1. Getting Customer Voice Input from HTML and Transcribing using Web Speech API

The customer initiates voice recording by clicking the “Start Recording” button on the customer.html page.

recognition = new webkitSpeechRecognition();

for (let i = event.resultIndex; i < event.results.length; ++i) {

const transcript = event.results[i][0].transcript;

if (event.results[i].isFinal) {

finalTranscript = transcript + ' ';

} else {

interimTranscript += transcript;

}

}

transcriptionDiv.innerHTML=`${interimTranscript}`;

The Web Speech API’s webkitSpeechRecognition object starts listening and continuously captures the customer’s speech, which the API then transcribes into text. The transcribed text is displayed in the transcriptionDiv on the customer.html page.

socket.emit('customerChatMessage', finalTranscript.trim());

The customer’s transcribed message is then sent to the server-side code through a WebSocket connection using Socket.IO.
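The loop shown earlier in this step relies on an event object and accumulator variables that the snippet does not show. As a rough sketch of how the pieces could fit together, the helper below separates final and interim text from one result event. The name collectTranscripts and the sample event are hypothetical; only resultIndex, results, isFinal, and transcript come from the Web Speech API.

```javascript
// Splits a speech recognition result event into final and interim text.
// `event` only needs the fields used below: resultIndex and results.
function collectTranscripts(event) {
  let finalText = "";
  let interimText = "";
  for (let i = event.resultIndex; i < event.results.length; ++i) {
    const result = event.results[i];
    const transcript = result[0].transcript; // first (best) alternative
    if (result.isFinal) {
      finalText += transcript + " ";
    } else {
      interimText += transcript;
    }
  }
  return { finalText: finalText.trim(), interimText };
}

// Stand-in for a browser event, shaped like a SpeechRecognitionEvent.
const sampleEvent = {
  resultIndex: 0,
  results: [
    Object.assign([{ transcript: "my order is late" }], { isFinal: true }),
    Object.assign([{ transcript: "can you" }], { isFinal: false }),
  ],
};
console.log(collectTranscripts(sampleEvent));
```

In the browser, a helper like this would be called from recognition.onresult. Interim results are only delivered when recognition.interimResults is set to true, and recognition.continuous controls whether listening continues after the first final result.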

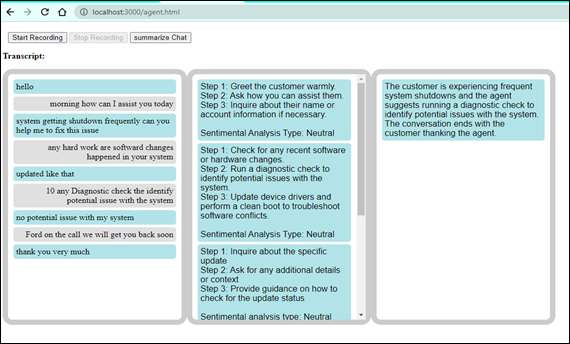

2. Performing Sentiment Analysis on Customer Response

The server-side code (app.js) receives the customer’s transcribed message and performs sentiment analysis on the text. The sentiment analysis determines whether the customer’s response is positive, negative, or neutral, based on which an appropriate agent instruction is generated.
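The post does not show how the sentiment step is implemented. One way it could work, assuming the model is asked through a chat-style prompt to answer with a single label, is sketched below. The function names, the prompt wording, and the fallback to "neutral" are all hypothetical.

```javascript
const SENTIMENT_LABELS = ["positive", "negative", "neutral"];

// Builds chat-style messages asking the model for a one-word sentiment label.
function buildSentimentMessages(customerText) {
  return [
    {
      role: "system",
      content:
        "Classify the sentiment of the customer's message. " +
        "Answer with exactly one word: positive, negative, or neutral.",
    },
    { role: "user", content: customerText },
  ];
}

// Normalizes the model's raw reply to a known label. Falls back to "neutral"
// when the reply does not contain one of the expected words.
function parseSentimentLabel(rawReply) {
  const text = String(rawReply || "").toLowerCase();
  const found = SENTIMENT_LABELS.find((label) => text.includes(label));
  return found || "neutral";
}
```

Parsing the reply defensively matters because a language model may add punctuation or capitalization around the label, and the rest of the pipeline is simpler if it only ever sees one of three values.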

3. Generating Agent Instruction Based on Customer Response and Sentiment Analysis

The generateResponse() function generates an instructional response for the agent based on the sentiment analysis result and the customer’s query. The response advises the agent on how to reply to the customer effectively. The generated instruction is then sent through Socket.IO to the agent.html page for display.

const reply = await generateResponse(message);
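The body of generateResponse() is not shown in this post. As an illustration only, the sketch below shows how a customer message and a sentiment label might be combined into a chat-style prompt before the API call. The name buildCoachingMessages and the prompt wording are assumptions.

```javascript
// Hypothetical prompt builder: combines the customer's message and the
// sentiment label into chat-style messages for the instruction request.
function buildCoachingMessages(customerMessage, sentiment) {
  const toneHints = new Map([
    ["negative", "The customer sounds upset; suggest an empathetic, de-escalating reply."],
    ["positive", "The customer sounds satisfied; suggest a friendly, efficient reply."],
    ["neutral", "The customer sounds neutral; suggest a clear, direct reply."],
  ]);
  // Unknown labels are treated as neutral.
  const hint = toneHints.get(sentiment) || toneHints.get("neutral");
  return [
    {
      role: "system",
      content:
        "You coach a contact center agent. " +
        "Give one short instruction on how to respond. " +
        hint,
    },
    { role: "user", content: `Customer said: ${customerMessage}` },
  ];
}
```

Keeping the prompt construction in its own function makes it easy to inspect and adjust the wording without touching the code that calls the API.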

Based on Customer Response and KB Article content

The readFileSync() function reads the contents of a file named “KBarticle.txt” from the file system and stores the text in a variable named context. The read is synchronous, so the script waits until it finishes before moving on to the next line.

The KB article text and the customer’s query are both passed to generateResponse(), which uses the AI model to find the relevant information in the article and create an appropriate answer for the agent. The answer instructs the agent on how to reply to the customer effectively and is then sent through Socket.IO to the agent.html page for display.

const fs = require("fs");

const context = fs.readFileSync("./KBarticle.txt", "utf8");

const reply = await generateResponse(message, context);
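If the KB article is long, sending all of it with every request may be wasteful. The sketch below shows one simple alternative that is not taken from the original application: split the article into paragraphs, score each by how many words it shares with the customer’s query, and keep the best few. The function names and the limit parameter are hypothetical.

```javascript
// Lowercases text and returns the set of its words of three or more characters.
function toWordSet(text) {
  return new Set(text.toLowerCase().match(/[a-z0-9]{3,}/g) || []);
}

// Returns up to `limit` paragraphs of `context` that share the most words
// with `query`. Paragraphs with no shared words are dropped.
function selectRelevantParagraphs(context, query, limit = 2) {
  const queryWords = toWordSet(query);
  return context
    .split(/\n\s*\n/)
    .map((paragraph) => paragraph.trim())
    .filter((paragraph) => paragraph.length > 0)
    .map((paragraph) => {
      let score = 0;
      for (const word of toWordSet(paragraph)) {
        if (queryWords.has(word)) score += 1;
      }
      return { paragraph, score };
    })
    .filter((entry) => entry.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, limit)
    .map((entry) => entry.paragraph);
}

const sampleArticle =
  "To reset a password, open Settings and choose Reset password.\n\n" +
  "Refunds are issued within five business days.\n\n" +
  "Our offices are closed on public holidays.";
console.log(selectRelevantParagraphs(sampleArticle, "When are refunds issued?", 1));
```

Plain word overlap is crude (it does not match "refund" with "refunds", for example), so this is only a starting point for trimming the context.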

4. Getting Agent Voice Input from HTML and Transcribing using Web Speech API

The agent starts voice recording by clicking the “Start Recording” button on the agent.html page. webkitSpeechRecognition() begins listening and continuously captures the agent’s speech, which the API then converts into text. The transcribed text is displayed in the transcriptionDiv on the agent.html page.

recognition = new webkitSpeechRecognition();

for (let i = event.resultIndex; i < event.results.length; ++i) {

const transcript = event.results[i][0].transcript;

if (event.results[i].isFinal) {

finalTranscript = transcript + ' ';

} else {

interimTranscript += transcript;

}

}

transcriptionDiv.innerHTML=`${interimTranscript}`;

The agent’s transcribed message is then sent to the server-side code (app.js) through a WebSocket connection using Socket.IO.

socket.emit('agentChatMessage', finalTranscript.trim());
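Both pages construct webkitSpeechRecognition directly. That is the prefixed name used by Chromium-based browsers; the standard name is SpeechRecognition, and some browsers expose neither. A small guard, sketched here with the hypothetical helper getSpeechRecognitionCtor, lets a page check for support before offering the “Start Recording” button.

```javascript
// Returns the speech recognition constructor exposed by `globalObject`
// (normally `window`), or null if the browser does not provide one.
function getSpeechRecognitionCtor(globalObject) {
  return (
    globalObject.SpeechRecognition ||
    globalObject.webkitSpeechRecognition ||
    null
  );
}

// Browser usage (not executed here):
// const Recognition = getSpeechRecognitionCtor(window);
// if (Recognition) {
//   const recognition = new Recognition();
//   recognition.start();
// } else {
//   // Hide or disable the recording button.
// }
```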

5. Real-time Communication using Socket.IO

The server-side code (app.js) receives the agent’s transcribed message and forwards it to the customer.html page through Socket.IO. Similarly, when a customer message arrives, the server generates the AI-based instructional response from the message and its sentiment, then sends that response to the agent.html page through Socket.IO. Because messages and responses are delivered as soon as they are created, both the customer and the agent can view them in real time.

socket.on('agentChatMessage', async (message) => {

io.emit('agentMessage', message);

});

socket.on('customerChatMessage', async (message) => {

io.emit('customerChatMessage', message);

const reply = await generateResponse(message);

io.emit('agentInstructor', reply);

});
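The handlers above assume that generateResponse() always succeeds. The sketch below shows the same routing with a fallback for failed API calls, written so that it can be exercised without a running server. The function registerChatHandlers and the fallback text are assumptions; the event names are the ones used in this post, and only socket.on and io.emit are called on the Socket.IO objects.

```javascript
// Registers the two chat handlers on one connected socket.
// `io` and `socket` are expected to behave like Socket.IO's server and socket
// objects; `generateResponse` is passed in so this sketch does not depend on
// a real API client.
function registerChatHandlers(io, socket, generateResponse) {
  // Agent messages are relayed as-is.
  socket.on("agentChatMessage", (message) => {
    io.emit("agentMessage", message);
  });

  // Customer messages are relayed, then used to produce an agent instruction.
  socket.on("customerChatMessage", async (message) => {
    io.emit("customerChatMessage", message);
    try {
      const reply = await generateResponse(message);
      io.emit("agentInstructor", reply);
    } catch (error) {
      // Assumption: on failure the agent sees a short notice instead of nothing.
      io.emit("agentInstructor", "Instruction unavailable: " + error.message);
    }
  });
}
```

On a real server this would be wired up inside the connection handler, for example io.on("connection", (socket) => registerChatHandlers(io, socket, generateResponse)). Note that io.emit broadcasts to every connected client, so a deployment handling several calls at once would likely need per-call rooms.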

6. Summarization

The summarizeChat() function summarizes the conversation based on the customer’s questions and the agent’s replies. The summary gives a quick overview of the interaction.

socket.on('summarizeChat', async (message) => {

const reply = await summarizeChat(fullChat);

});
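The snippet passes a fullChat value to summarizeChat() without showing how it is built. One possibility, sketched here under the assumption that the server keeps an in-memory log of each message as it is relayed, is to store speaker and text pairs and render them as a transcript. The names createChatLog and buildSummaryMessages, and the prompt wording, are hypothetical.

```javascript
// In-memory log of the conversation. Each entry records who spoke and what
// was said.
function createChatLog() {
  const entries = [];
  return {
    add(speaker, text) {
      entries.push({ speaker, text: text.trim() });
    },
    // Renders the log as "Speaker: text" lines, one per message.
    toTranscript() {
      return entries.map((entry) => `${entry.speaker}: ${entry.text}`).join("\n");
    },
  };
}

// Builds chat-style messages asking for a short summary of the transcript.
function buildSummaryMessages(transcript) {
  return [
    { role: "system", content: "Summarize this support call in three sentences or fewer." },
    { role: "user", content: transcript },
  ];
}

const chatLog = createChatLog();
chatLog.add("Customer", "My order has not arrived. ");
chatLog.add("Agent", "I am sorry about that, let me check the status.");
console.log(chatLog.toTranscript());
```

Labeling each line with its speaker gives the model enough structure to attribute questions and answers correctly in the summary.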

Output View

Customer Page (customer.html)

Agent Page (agent.html)

Conclusion

The Agent Coach application improves customer service by offering real-time chat with voice input support. Agents can deliver effective, personalized help to customers by using the Web Speech API for voice-to-text conversion, Socket.IO for real-time communication, and the MS Azure OpenAI API for AI-based instructional responses. By combining modern web technologies with AI-generated instructions, Agent Coach is a useful tool for improving customer-agent interactions and overall customer satisfaction. Contact us at Sensiple for more information and to unlock the potential of Agent Coach for your business.

About the Author:

Kannan Kumar is a Developer at Sensiple with 1 year of experience in the Contact Center practice. He is well-versed in Java and has handled an array of tasks in Nerve Framework using Node.js.
